I’m really happy to announce another update to the aX Plugins. Every plugin has received updates, some big and some small. These updates add new features, make the plugins faster and easier to use, and refresh the interface.

You can download the updated versions from your account.

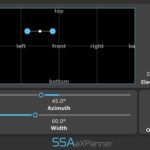

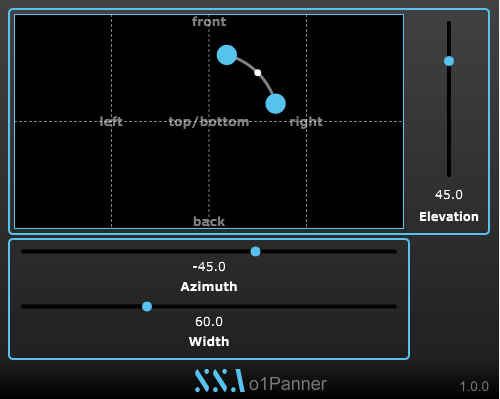

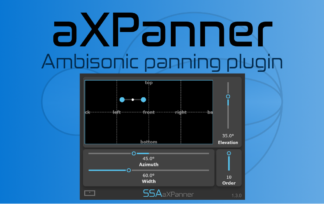

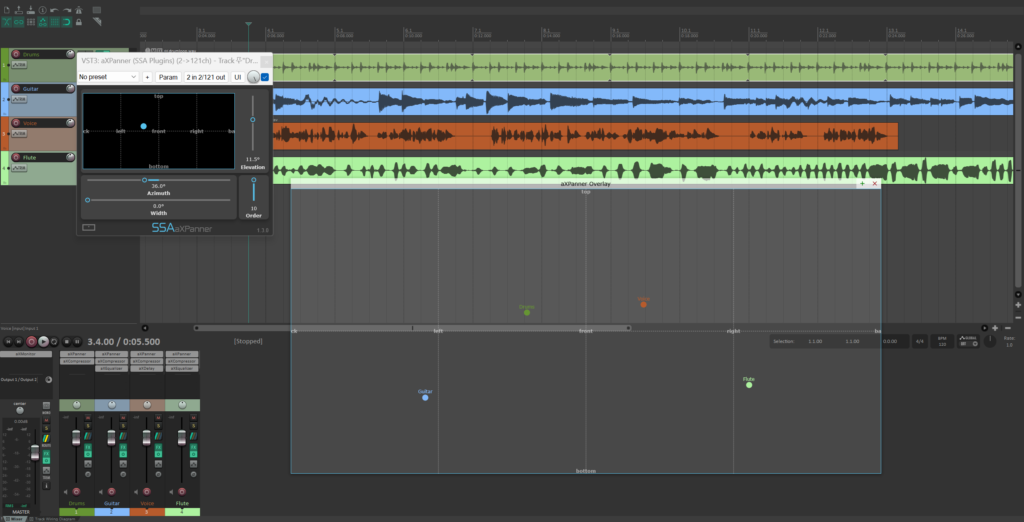

aXPanner – New Feature!

Following user requests, aXPanner now has a new resizable pop-out window that you can overlay on 360 videos or images. It also gathers all instances of the plugin in one place, so you can see at a glance where every other track in the project has been panned.

Each marker displays the name of the plugin’s track, so you always know what you’re working on. The marker also takes on the colour you set for the track in your DAW, which makes things even clearer and more convenient. For extra customisation, you can also select the marker colour manually.

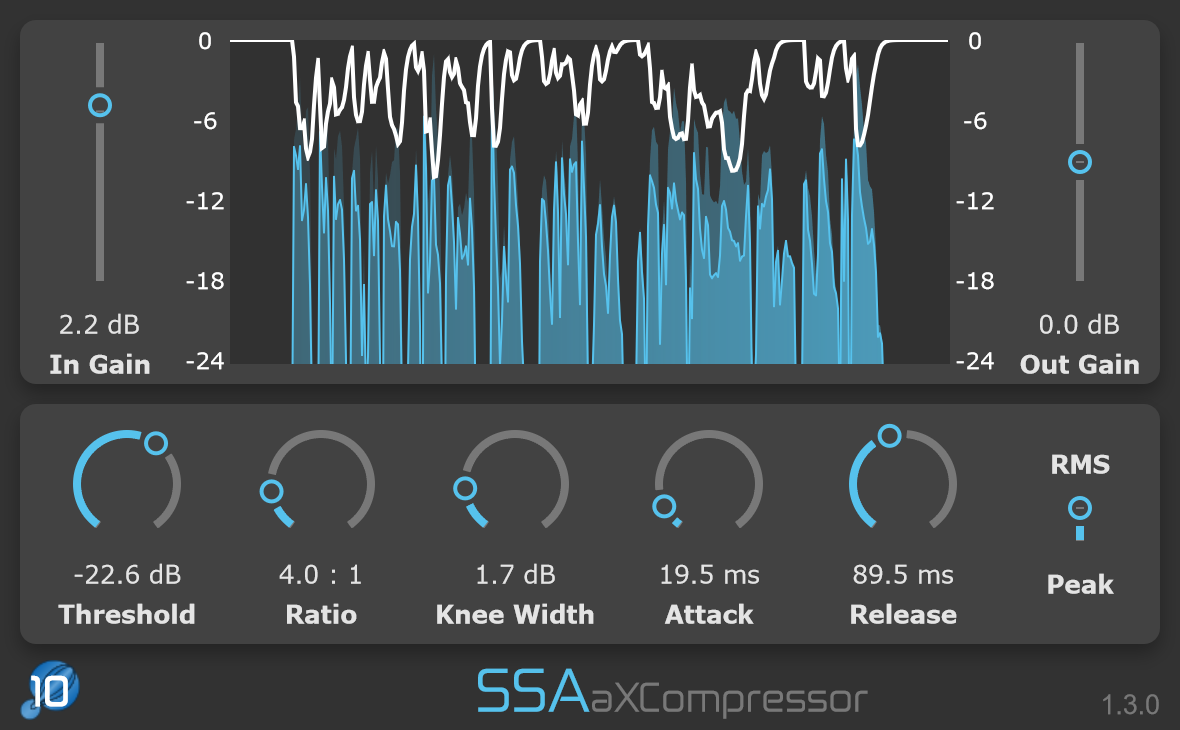

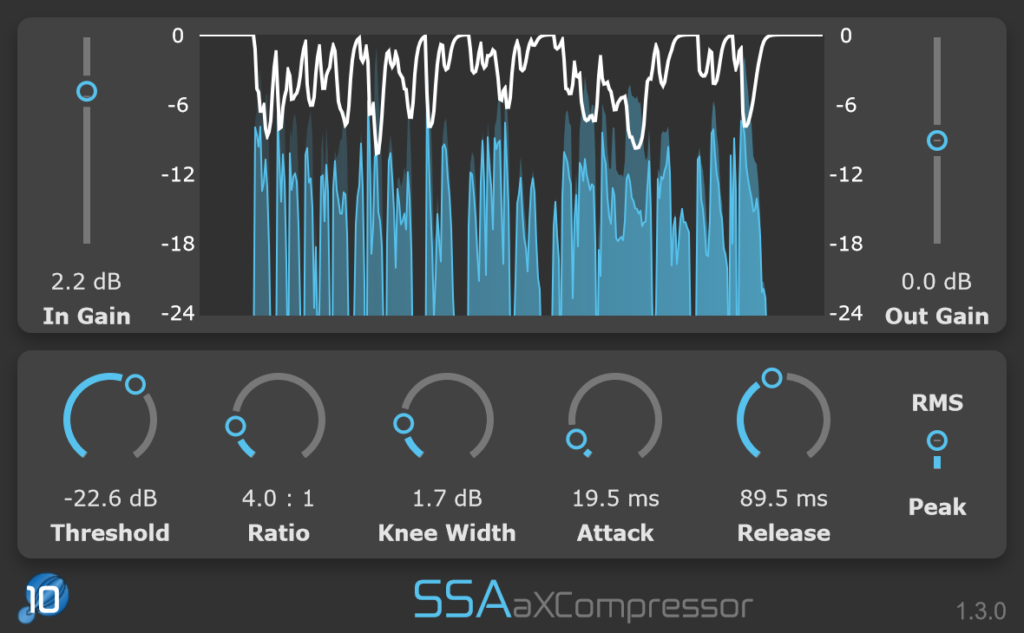

aXCompressor and aXGate – Better Visualisation

aXCompressor and aXGate now have new gain-reduction meters. As well as looking a bit nicer, they now show the input and output signal levels, so you can get a really clear idea of just how much gain reduction you’re applying.

All Plugins – Pro Tools Automation Shortcut

This was another user-requested feature and is a quality-of-life improvement for Pro Tools users: all plugins now support the Ctrl+Win+Click (Windows) and Ctrl+Cmd+Click (Mac) shortcut, which opens a parameter’s automation lane directly.

To use it, you need to do one of two things:

- Enable each parameter individually by clicking the parameter you want to automate while holding Ctrl+Win+Alt (Windows) or Ctrl+Cmd+Alt (Mac). This brings up a pop-up that lets you enable the parameter. You can then open its automation lane with Ctrl+Win+Click (Windows) or Ctrl+Cmd+Click (Mac).

- (Preferred) In Pro Tools, enable “Plug-in Control Default to Auto-Enabled” under Preferences > Mixing. Any new plugins you insert will then have their automation controls already enabled.

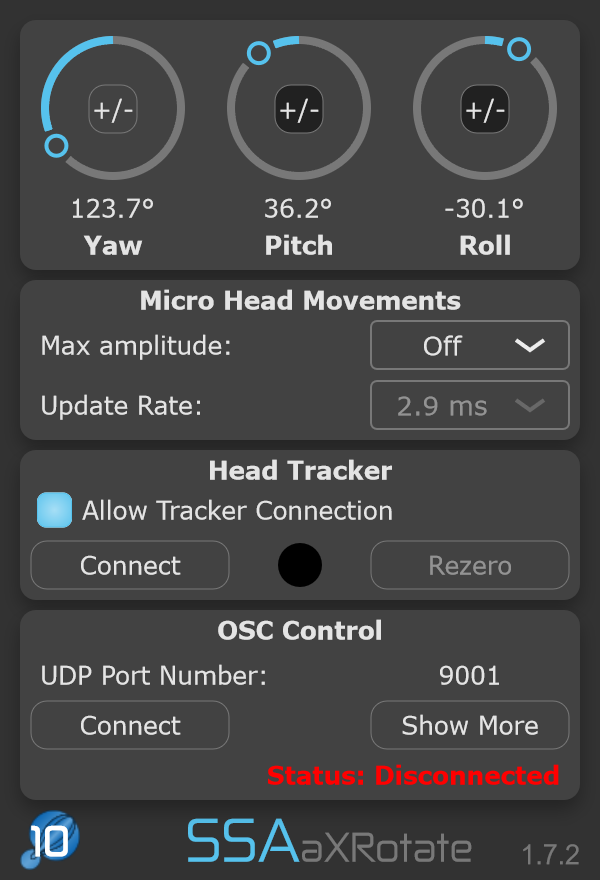

All Plugins – GUI Refresh

All of the plugins have had a light GUI refresh to make them a little sleeker and more modern. It’s subtle, but I think it makes a big difference. Can you spot the changes?

What Do You Want To See?

Do you have any features you’d like to see in a future version of the plugins? Some small thing that would make your life easier? A cool feature that would make working with them even more fun? If so, get in touch with me and I’ll do my best to make sure it happens!